Ravi & Emma

Helping a computer recognise 14 Australian Sign Language (Auslan) signs

Overview

Ravi & Emma will be an interactive documentary, told in Auslan, that illuminates the richness of Deaf culture and the complexity of Auslan through the story of two young Australians living in London. Through the project, people who may never have signed before will have the opportunity to experience a small part of this complex and rich language. Audiences will navigate the story by signing a selection of Auslan signs to their webcam.

This data capture website was built to collect videos of people signing a selection of Auslan signs. These videos supported the effort to train a machine learning model that can recognise a specific set of 14 Auslan signs.

Deaf-led from the start

The project is being made in collaboration with Ravi Vasavan and Emma Anderson, and developed with Deaf storytellers, linguists and academics. The selection of signs was also informed by what would work within the constraints of gesture recognition technology.

“At its most effective, digital storytelling is a powerful tool of emancipation, revelation and discovery to maker and viewer alike.”

— Catherine Boase

Project Goals

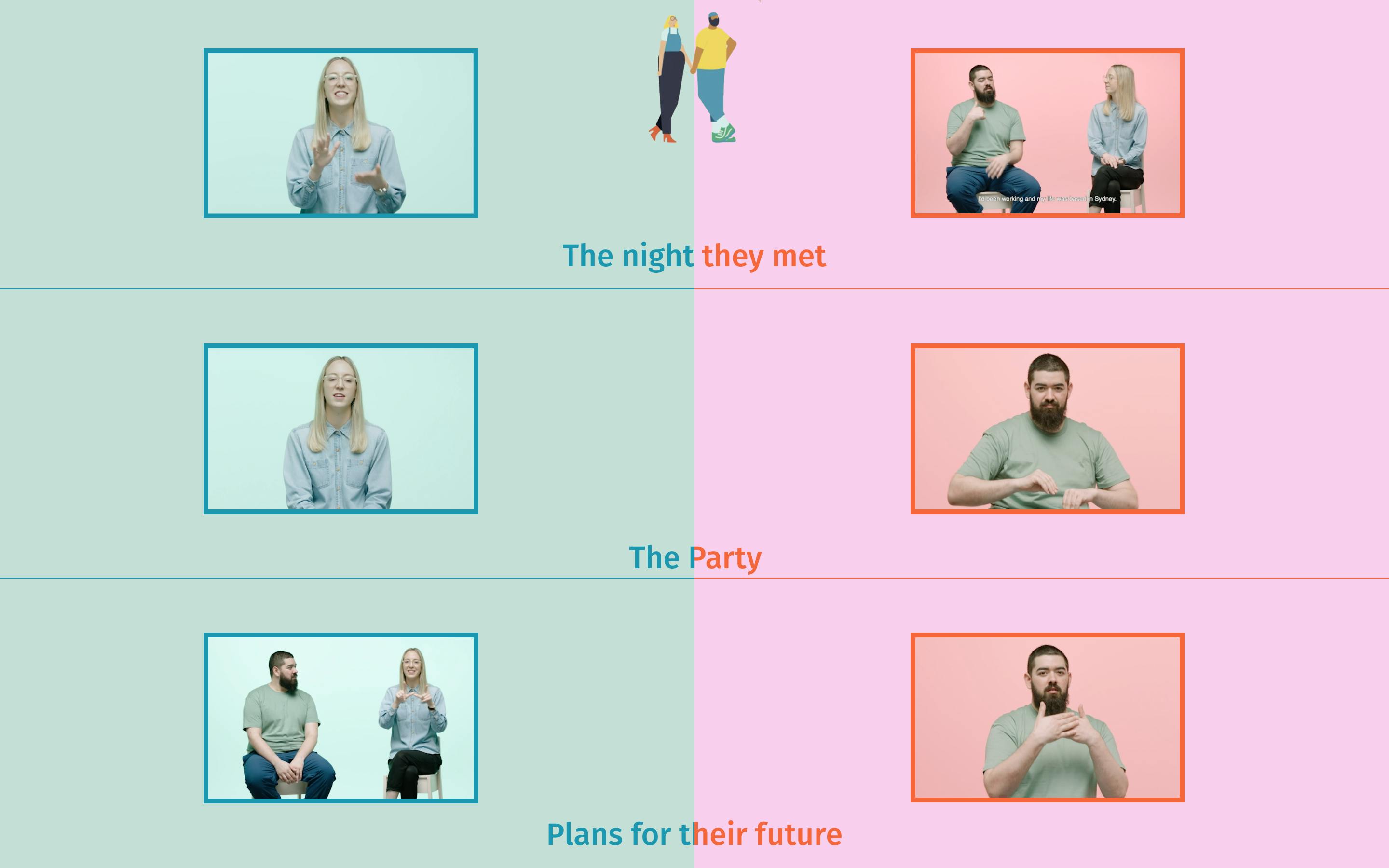

The interactive documentary is intended to illuminate the richness of Deaf culture and the complexity of Auslan through the personal story of Ravi, who is Deaf, and Emma, who is hearing.

Allow audiences to experience Auslan

Work with Deaf storytellers and the Auslan community to invite audiences to experience Auslan.

Two Perspectives

Audiences control which perspective of the story they view. They can switch between the two perspectives at any point in the story by signing either character's name.

Rewarding Narrative Beats

An engaging experience that lets users follow the same story arc from each character's perspective.

Recognising Diversity

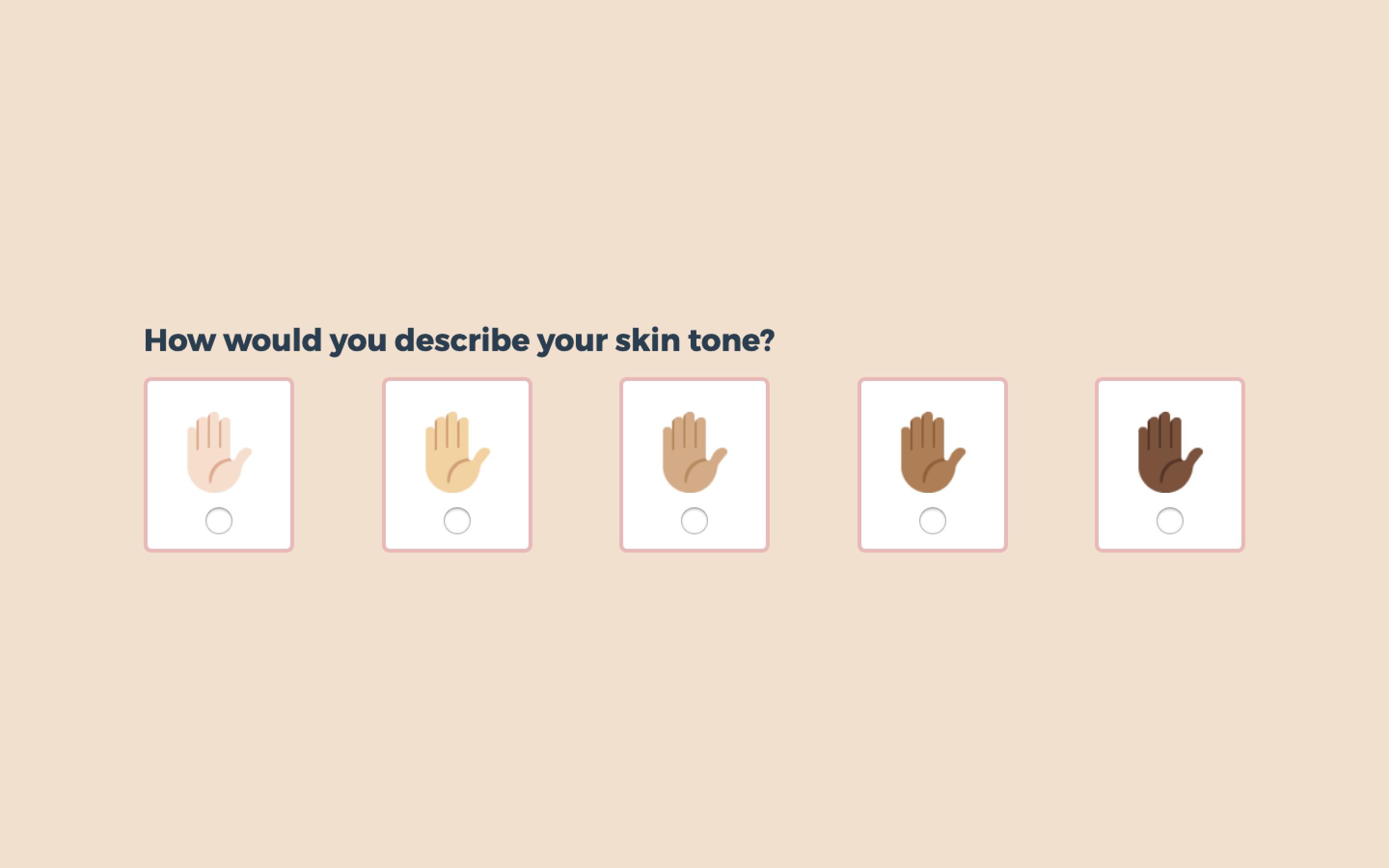

The data collection process was deliberately planned to cover a wide range of demographic characteristics and environmental factors, such as age, skin tone, lighting, clothing and appearance.

Preparing the data for training

Each recorded sign was classified and scored by a human reviewer against a baseline for form and timing developed with the Auslan community. These videos and scores were then used to train a machine learning model to classify the signs. We also included training data of people doing something other than signing, so that the model could accurately detect negative cases where none of the target signs is being made.
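The step of turning moderated recordings into labelled training examples can be sketched roughly as follows. This is a minimal TypeScript illustration, not the project's actual pipeline. The `ModeratedClip` shape, the 0 to 1 score scale, the `minScore` threshold and the `no_sign` label are all assumptions made for the example. Using the human score as a cut-off is only one plausible way the scores could feed into training.

```typescript
// Hypothetical shape of a moderated recording. `sign` is the sign the
// participant was asked to perform, or null for a deliberate non-signing clip.
interface ModeratedClip {
  videoKey: string; // e.g. a storage object key (assumed)
  sign: string | null;
  score: number; // human score against the baseline (assumed 0..1 scale)
}

interface TrainingExample {
  videoKey: string;
  label: string;
}

// Hypothetical label for the negative class ("not one of the target signs").
const NO_SIGN = "no_sign";

// Keeps signing clips whose score meets the threshold, and labels every
// non-signing clip with the negative class so the model learns to reject it.
// Non-signing clips are kept regardless of score.
function toTrainingExamples(clips: ModeratedClip[], minScore: number): TrainingExample[] {
  const examples: TrainingExample[] = [];
  for (const clip of clips) {
    if (clip.sign === null) {
      examples.push({ videoKey: clip.videoKey, label: NO_SIGN });
    } else if (clip.score >= minScore) {
      examples.push({ videoKey: clip.videoKey, label: clip.sign });
    }
  }
  return examples;
}
```

Keeping negatives as an explicit class, rather than relying on low confidence alone, gives the classifier concrete examples of everyday movement that should not trigger a sign.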

Technical Implementation

The website and an internal moderation tool were built using Vue.js. I wrote a Vue plugin that wraps the MediaRecorder API available in modern web browsers and streams the recorded data over WebSockets to a Node.js server running on AWS EC2. DynamoDB and S3 were used for the database and storage respectively.
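The streaming idea can be illustrated with a small sketch in which recorded chunks are queued and forwarded over a socket in order. This is not the plugin's code. `ChunkSource`, `ChunkSink`, `RecordingStreamer`, the one-second default timeslice and the JSON end-of-recording message are hypothetical. The browser objects are hidden behind small interfaces so the logic can run outside a browser.

```typescript
// Hypothetical minimal interfaces standing in for the browser's MediaRecorder
// and WebSocket, so the streaming logic can be exercised outside a browser.
interface ChunkSource<C> {
  start(timesliceMs: number): void;
  stop(): void;
  onChunk(handler: (chunk: C) => void): void; // called once per recorded chunk
  onStop(handler: () => void): void; // called after the final chunk
}

interface ChunkSink<C> {
  isOpen(): boolean;
  send(payload: C | string): void;
}

class RecordingStreamer<C> {
  private readonly source: ChunkSource<C>;
  private readonly sink: ChunkSink<C>;
  private readonly timesliceMs: number;
  // Chunks (and the end marker) waiting for the socket to be open, in order.
  private readonly pending: (C | string)[] = [];
  private chunkCount = 0;

  constructor(source: ChunkSource<C>, sink: ChunkSink<C>, timesliceMs = 1000) {
    this.source = source;
    this.sink = sink;
    this.timesliceMs = timesliceMs;
    source.onChunk((chunk) => {
      this.chunkCount++;
      this.pending.push(chunk);
      this.flush();
    });
    // Queue a hypothetical end-of-recording message so the server knows the
    // upload is complete and how many chunks to expect.
    source.onStop(() => {
      this.pending.push(JSON.stringify({ type: "end", chunks: this.chunkCount }));
      this.flush();
    });
  }

  start(): void {
    this.source.start(this.timesliceMs);
  }

  stop(): void {
    this.source.stop();
  }

  // Sends queued items in order while the socket is open. Call it again when
  // the socket (re)opens to drain anything recorded while it was unavailable.
  flush(): void {
    while (this.sink.isOpen() && this.pending.length > 0) {
      this.sink.send(this.pending.shift()!);
    }
  }
}
```

In a browser, the source would be a thin adapter over `MediaRecorder`. It would forward `event.data` from `dataavailable` events, forward the `stop` event, and call `start(timeslice)` so chunks arrive periodically. The sink would wrap a `WebSocket`, with `isOpen` checking `readyState === WebSocket.OPEN` and the socket's `open` event calling `flush()`. `MediaRecorder` delivers its last chunk before firing `stop`, so queuing the end marker from the stop handler keeps it behind the final chunk.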

Awards

The final implementation won the 2022 Walkley Innovation award.