Sound+Vision

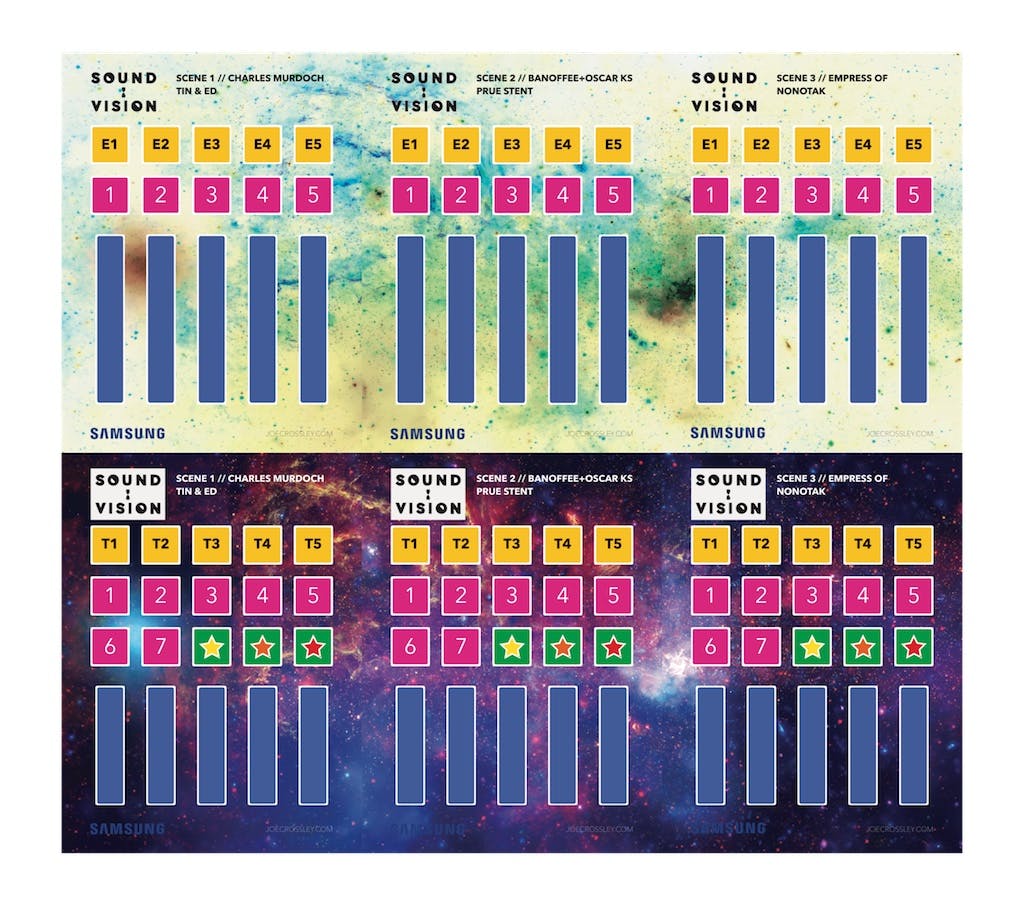

An Android application to control the sound and visuals in a live performance at the Sydney Opera House.

Overview

I developed a native Android application that artists used to control the sound and visuals during the live performance. The event had three parts, and each part was controlled using two tablets. The app was built using openFrameworks.

An Adaptive Interface

A single application reads a layout file that resides on each individual tablet, and draws the interface based on the contents. This drastically sped up development and deployment, as it was no longer necessary to keep track of each tablet and develop separate applications.
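As a rough illustration of the idea, here is a minimal sketch of a layout reader in plain C++. The file format (one widget per line: type, OSC address, position, size), the `Widget` struct, and the `parseLayout` function are all assumptions made for this example and are not taken from the actual project.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Hypothetical widget description; field names are illustrative only.
struct Widget {
    std::string type;     // e.g. "toggle" or "slider"
    std::string address;  // OSC address the widget reports to
    float x = 0, y = 0;   // top-left corner in pixels
    float w = 0, h = 0;   // width and height in pixels
};

// Parse a layout in the assumed format, one widget per line:
//   <type> <address> <x> <y> <width> <height>
// Empty lines and lines starting with '#' are ignored.
// Lines that do not contain all six fields are skipped.
std::vector<Widget> parseLayout(const std::string& text) {
    std::vector<Widget> widgets;
    std::istringstream lines(text);
    std::string line;
    while (std::getline(lines, line)) {
        if (line.empty() || line[0] == '#') continue;
        std::istringstream fields(line);
        Widget widget;
        if (fields >> widget.type >> widget.address
                   >> widget.x >> widget.y >> widget.w >> widget.h) {
            widgets.push_back(widget);
        }
    }
    return widgets;
}
```

With something like this, the same binary can run on every tablet: the app loads the text of its local layout file at startup, calls `parseLayout`, and loops over the resulting widgets in its draw routine. Changing a tablet's interface then means editing a text file rather than rebuilding the app.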

Messaging with OSC

The tablets sent messages to a media server using the Open Sound Control (OSC) protocol, a flexible messaging format that is well suited to real-time control of media processing.

The server was notified whenever a toggle was turned on or off, and it received the slider values in real time.
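To show what such a message looks like on the wire, the sketch below encodes a single-float OSC message in standard C++, following the OSC 1.0 layout (null-padded address, null-padded type tag string, big-endian 32-bit float). In an openFrameworks app this is normally handled by the ofxOsc addon; the function names here, the example addresses, and the choice to send a toggle as 1.0 or 0.0 are assumptions for illustration only.

```cpp
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Append a string plus 1 to 4 null bytes so that the written length
// is a multiple of 4, as OSC 1.0 requires for strings.
static void appendPadded(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    const std::size_t pad = 4 - (s.size() % 4);  // always at least one terminator
    out.insert(out.end(), pad, static_cast<std::uint8_t>(0));
}

// Encode an OSC message carrying one float argument.
// The result can be sent as the payload of a UDP datagram.
std::vector<std::uint8_t> encodeOscFloat(const std::string& address, float value) {
    static_assert(sizeof(float) == 4, "OSC floats are 32 bits wide");
    std::vector<std::uint8_t> packet;
    appendPadded(packet, address);  // e.g. "/sound/volume" (hypothetical)
    appendPadded(packet, ",f");     // type tag string: one float32 argument

    // Copy the float's bit pattern, then write it most significant byte first.
    std::uint32_t bits = 0;
    std::memcpy(&bits, &value, sizeof(bits));
    for (int shift = 24; shift >= 0; shift -= 8) {
        packet.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFF));
    }
    return packet;
}
```

A slider dragged to its midpoint could then be reported as `encodeOscFloat("/sound/volume", 0.5f)`, and a toggle switching on as `encodeOscFloat("/visuals/strobe", 1.0f)`. Because each message is small and self-describing, the server can route it by address without any per-tablet configuration.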